The storage systems in most corporate IT environments rarely meet the expectations and requirements of their end users, administrators and financial managers. Three major challenges go hand in hand with the acquisition and operation of a storage environment: price, performance and function. There is another way: virtualised storage clusters and software-defined storage (SDS).

With the introduction of storage area networks (SANs), which are dedicated high-speed networks, the classic data processing landscape was split into server and storage and reconnected via the SAN. Software-defined storage (SDS) then separated the physical hardware from the storage software. Functions such as protecting data, controlling data placement and providing capacity are handled by the storage software and can be flexibly expanded there. Without software-defined storage, data storage in a company is managed separately for each technology in use: NAS, SAN, local hard disks and other physical data carriers. Different types of data are stored on these and exposed as shares in the network. All of these systems act independently of each other and each has its own administration. This created the double trilemma.

- The managing directors or CFOs were not happy because these systems were often very expensive to purchase and maintain.

- The technical staff was unhappy because these solutions were either too complex and error-prone in administration or, on the contrary, represented a black box that could hardly be adapted to their own needs.

- The users who worked with the servers and applications were unhappy because they often experienced a deterioration in performance.

Of the three parties involved, often only one or two were happy with the storage environment in the end. For example, management and the users were satisfied because the system was relatively cheap and still performed well, but IT struggled with the technology. Or IT and the users were happy, but the CFO had to dig deep into the budget because the solution was expensive.

The following sections describe the three central challenges for modern storage architectures. They apply to large and small storage environments alike, regardless of the technical requirements, the storage technologies used or the specific hardware manufacturers.

Experience has shown that most storage systems are difficult and cumbersome to administer. Only a few have graphical user interfaces, they usually cannot be integrated into monitoring systems, and their range of functions is limited. The available hard disk capacity forms one large block that has to be divided into individual, inflexible units (LUNs), which are then statically assigned to different servers. The manufacturers' expensive licensing models follow the same principle: each unit and each connection to an accessing system, such as an application server, needs a licence. It does not matter whether that system is a virtualised VMware server with 100 virtual machines or a single Windows or Linux server; there is no flexibility.

Solution:

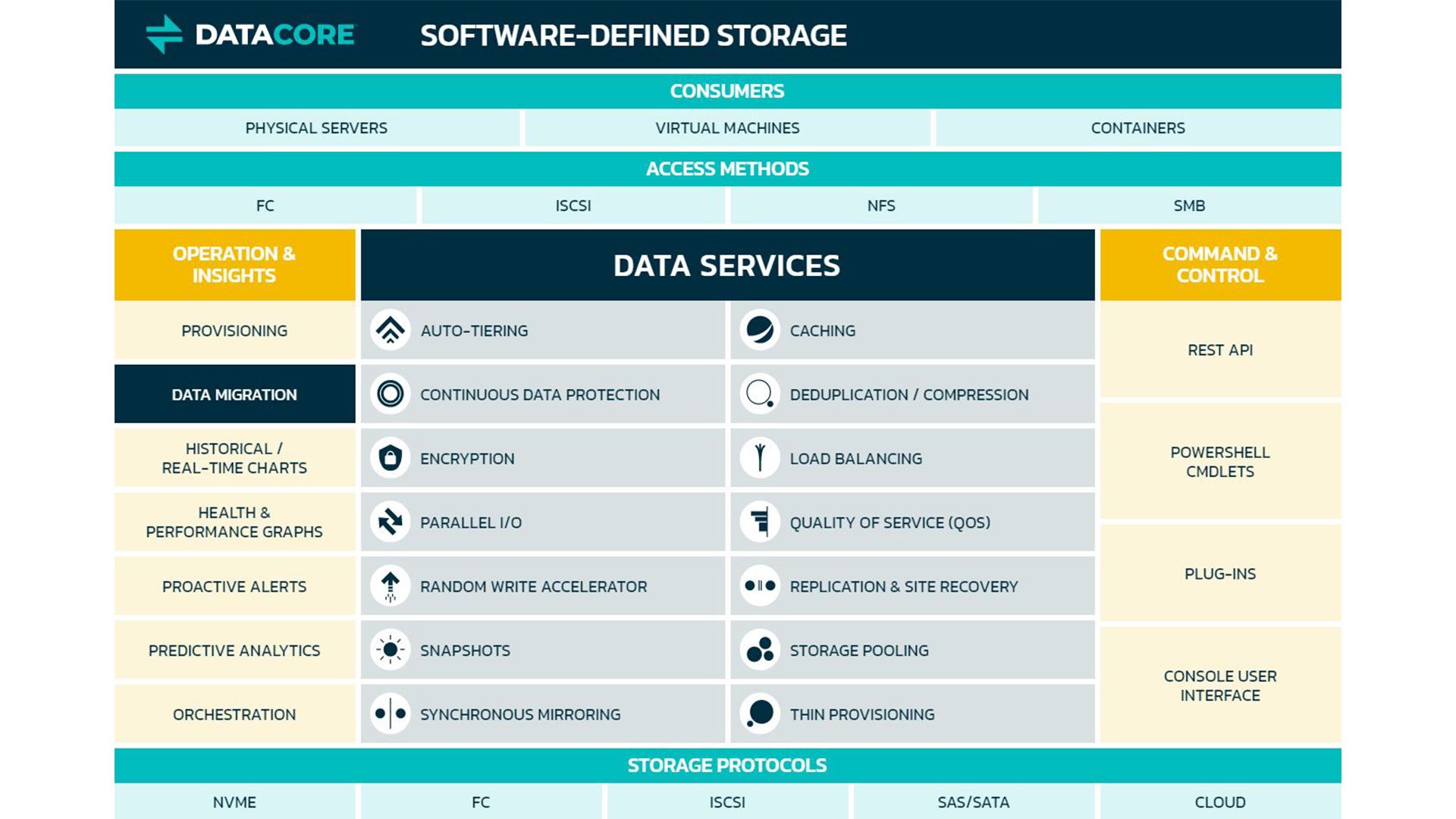

An abstraction layer is introduced: an intermediate layer of software-assisted storage virtualisation. The goal is to manage the large storage blocks efficiently and easily. Intelligent software now controls what was previously cast in hardware. Many functions become possible and can be developed continuously. Simple user interfaces facilitate the creation and allocation of storage units, reduce complexity and provide a better overview. Island systems, i.e. mostly vendor-specific storage environments whose manufacturers try to defend their "turf", can now be combined with each other.
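
The idea of pooling vendor-specific systems behind one layer can be illustrated with a minimal sketch. It assumes a simple in-memory model: the names `Backend`, `StoragePool` and `create_volume`, as well as the capacities used, are hypothetical and do not correspond to any particular SDS product.

```python
from dataclasses import dataclass, field


@dataclass
class Backend:
    """A physical storage system of any vendor (hypothetical model)."""
    name: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


@dataclass
class StoragePool:
    """Abstraction layer that presents several backends as one pool."""
    backends: list[Backend]
    volumes: dict[str, dict[str, int]] = field(default_factory=dict)

    @property
    def free_gb(self) -> int:
        return sum(b.free_gb for b in self.backends)

    def create_volume(self, name: str, size_gb: int) -> dict[str, int]:
        """Carve a virtual volume out of the pool, spanning backends if needed."""
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        if size_gb > self.free_gb:
            raise ValueError("not enough free capacity in the pool")
        placement: dict[str, int] = {}
        remaining = size_gb
        # Use the backend with the most free space first.
        for backend in sorted(self.backends, key=lambda b: b.free_gb, reverse=True):
            if remaining == 0:
                break
            chunk = min(backend.free_gb, remaining)
            if chunk > 0:
                backend.used_gb += chunk
                placement[backend.name] = chunk
                remaining -= chunk
        self.volumes[name] = placement
        return placement


# Illustrative capacities only: two arrays from different vendors.
pool = StoragePool([Backend("vendor_a_array", 100), Backend("vendor_b_array", 50)])
placement = pool.create_volume("erp_data", 120)
print(placement)     # which backend holds how many GB of the volume
print(pool.free_gb)  # capacity left in the combined pool
```

The server that receives `erp_data` sees a single 120 GB volume, although neither array could have provided it alone.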

In addition, a core requirement can be met: high availability, and thus business continuity, even if individual infrastructure components or storage systems fail. The risk of disrupted business operations due to loss or unavailability of data is greatly reduced.

Because this intermediate layer is fully transparent, connected servers can work as usual with their (virtualised) hard disks. It is also possible to switch between different data sources in the background without interruption, as is necessary for maintenance work, for example. Downtimes of IT landscapes are thus a thing of the past.
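
How a virtual disk can keep serving data while one data source is offline can be sketched as follows. This is a simplified illustration under stated assumptions: `Backend`, `MirroredDisk` and `BackendUnavailable` are hypothetical names, writes are mirrored synchronously, and the resynchronisation of a backend that returns from maintenance is deliberately left out.

```python
class BackendUnavailable(Exception):
    """Raised when a backend is offline (hypothetical error type)."""


class Backend:
    """In-memory stand-in for one storage system."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.online = True
        self.blocks: dict[int, bytes] = {}

    def write(self, block_no: int, data: bytes) -> None:
        if not self.online:
            raise BackendUnavailable(self.name)
        self.blocks[block_no] = data

    def read(self, block_no: int) -> bytes:
        if not self.online:
            raise BackendUnavailable(self.name)
        return self.blocks[block_no]


class MirroredDisk:
    """Virtual disk that mirrors writes and reads from any online copy."""

    def __init__(self, *backends: Backend) -> None:
        self.backends = list(backends)

    def write(self, block_no: int, data: bytes) -> None:
        written = 0
        for backend in self.backends:
            try:
                backend.write(block_no, data)
                written += 1
            except BackendUnavailable:
                continue  # a real system would queue a resync here
        if written == 0:
            raise BackendUnavailable("no backend accepted the write")

    def read(self, block_no: int) -> bytes:
        for backend in self.backends:
            try:
                return backend.read(block_no)
            except BackendUnavailable:
                continue  # fall through to the next copy
        raise BackendUnavailable("no backend could serve the read")


primary, secondary = Backend("site_a"), Backend("site_b")
disk = MirroredDisk(primary, secondary)
disk.write(0, b"payroll")

primary.online = False   # e.g. maintenance on the first system
data = disk.read(0)      # still served, now from the second copy
```

The server using `disk` issues the same `read` call before and during the maintenance window; only the layer underneath changes where the data comes from.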

Replicating data from one's own company location to other fire compartments or to other company sites is also no problem.

Many are concerned that virtualising storage could have a negative impact on performance. Besides availability, fast response times of applications and databases are another core requirement. Slow access speeds are a no-go.

A wide variety of new requirements arise for a wide variety of reasons and must be met: tiering of data (cold storage, hot storage), data encryption, snapshots, quality of service, replication and site recovery, thin provisioning and deduplication.

Solution:

Software-controlled caches, i.e. intermediate storage, alone improve speed many times over. Task parallelisation, as is common in modern software architectures, breaks up the still predominant serialised processing of hardware systems.

Caching and parallelisation in combination result in an enormous increase in performance. Performance gains by a factor of 10 can often be observed!
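
Both mechanisms can be shown in a small sketch. It assumes an in-memory stand-in for a disk array (`SlowBackend`), a least-recently-used read cache (`CachedReader`) and a striped layout in which block `n` lives on backend `n % len(backends)`; all of these names and the layout are hypothetical choices for illustration. The sketch counts backend accesses rather than measuring time, so it makes no claim about actual speed-up factors.

```python
from collections import OrderedDict
from concurrent.futures import ThreadPoolExecutor


class SlowBackend:
    """Stand-in for a disk array; counts how often it is actually read."""

    def __init__(self) -> None:
        self.reads = 0

    def read(self, block_no: int) -> bytes:
        self.reads += 1
        return f"block-{block_no}".encode()


class CachedReader:
    """Least-recently-used read cache in front of a backend."""

    def __init__(self, backend: SlowBackend, capacity: int) -> None:
        self.backend = backend
        self.capacity = capacity
        self.cache: OrderedDict[int, bytes] = OrderedDict()

    def read(self, block_no: int) -> bytes:
        if block_no in self.cache:
            self.cache.move_to_end(block_no)  # mark as recently used
            return self.cache[block_no]
        data = self.backend.read(block_no)
        self.cache[block_no] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)    # evict the oldest entry
        return data


def read_striped(backends: list[SlowBackend], block_nos: list[int]) -> list[bytes]:
    """Read blocks striped across backends, one worker thread per backend."""
    groups: dict[int, list[int]] = {i: [] for i in range(len(backends))}
    for n in block_nos:
        groups[n % len(backends)].append(n)

    def read_group(index: int) -> dict[int, bytes]:
        return {n: backends[index].read(n) for n in groups[index]}

    results: dict[int, bytes] = {}
    with ThreadPoolExecutor(max_workers=len(backends)) as executor:
        for part in executor.map(read_group, groups):
            results.update(part)
    return [results[n] for n in block_nos]


# Caching: five requests, but only two of them reach the backend.
backend = SlowBackend()
reader = CachedReader(backend, capacity=2)
for block_no in [1, 2, 1, 1, 2]:
    reader.read(block_no)

# Parallelisation: four blocks are fetched from two arrays at the same time.
arrays = [SlowBackend(), SlowBackend()]
blocks = read_striped(arrays, [0, 1, 2, 3])
```

Each backend is touched by exactly one worker thread in `read_striped`, which keeps the access counters consistent without additional locking.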

Notably, the existing storage hardware can, if desired, continue to be used in the background. It is enriched with intelligence and can thus be used much more efficiently.

As far as features are concerned, the software also plays to its strengths as the managing instance and fulfils them comprehensively, because the listed functions have nothing to do with the hardware. To the SSD, SAS/SATA hard drive or cloud storage, the functional requirement is irrelevant; what matters is the agile and dynamic control of data on data carriers, not the hardware per se.
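
That such functions are purely a matter of software can be illustrated with deduplication and thin provisioning. The following is a minimal sketch, assuming fixed-size blocks identified by their SHA-256 digest; the class `DedupStore` and its methods are hypothetical, and the reclaiming of chunks that are no longer referenced is omitted.

```python
import hashlib


class DedupStore:
    """Block store that keeps identical blocks only once (simplified)."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}             # digest -> block data
        self.mapping: dict[tuple[str, int], str] = {}  # (volume, block) -> digest

    def write(self, volume: str, block_no: int, data: bytes) -> None:
        digest = hashlib.sha256(data).hexdigest()
        # Store the data only if this content has not been seen before.
        self.chunks.setdefault(digest, data)
        self.mapping[(volume, block_no)] = digest

    def read(self, volume: str, block_no: int) -> bytes:
        return self.chunks[self.mapping[(volume, block_no)]]

    @property
    def logical_bytes(self) -> int:
        """Bytes as seen by the servers using the volumes."""
        return sum(len(self.chunks[d]) for d in self.mapping.values())

    @property
    def physical_bytes(self) -> int:
        """Bytes that actually occupy space on the data carriers."""
        return sum(len(c) for c in self.chunks.values())


store = DedupStore()
os_image = b"\x00" * 4096  # identical block written by two servers
store.write("vm1", 0, os_image)
store.write("vm2", 0, os_image)
store.write("vm2", 1, b"\x01" * 4096)

print(store.logical_bytes)   # 12288: three blocks of 4096 bytes were written
print(store.physical_bytes)  # 8192: only two distinct blocks are stored
```

Because only blocks that have actually been written appear in `mapping`, the same structure also behaves like thin provisioning: a volume consumes space as it is filled, not when it is created. Nothing in the sketch depends on whether the chunks end up on SSDs, hard drives or cloud storage.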

Financial managers face major challenges: storage systems are very expensive to purchase and operate. More budget is continuously needed for storage expansions, as data growth knows only one direction: upwards. The dreaded vendor lock-in usually ties a company to a specific manufacturer for many years. For example, a standard hard drive tied to a specific manufacturer often costs three times as much as the same hard drive on the open market. Once certain capacity limits have been exceeded, a simple expansion is not always enough: it is either technically impossible or not permitted. The company then has to buy a completely new, larger model of storage cluster, regardless of the old hardware.

The solution:

The storage abstraction layer already described removes the boundaries of the physical units and completely breaks the dependence on individual manufacturers. Whichever capacity or hardware is currently most cost-efficient can be purchased as needed at any time. Existing systems can simply continue to be used, protecting the investment, and often for longer than usual because performance has increased. Preventive replacement out of fear of "old" hardware can also be postponed, thanks to the high availability feature.

Effects such as saved training & certification costs for technical staff also play a role.

Different licensing models become possible: one-off and recurring costs are linked to relevant and plannable key figures, such as the current hard disk consumption of the IT landscape. This eliminates the need for performance and capacity forecasts spanning several years. Flexible rental options, even billed monthly and based on the resources actually used, including hardware, are also conceivable. This would also make it possible to switch from capex to opex budgets.